Introducing YOLOBench by Deeplite!

A benchmark of over 900 YOLO-based object detectors

We are excited to release YOLOBench, a latency-accuracy benchmark of over 900 YOLO-based object detectors for embedded use cases on different edge hardware platforms. The paper was accepted at the ICCV 2023 RCV workshop, and you can read it in full on arXiv.

Check out the interactive YOLOBench app on HuggingFace Spaces where you can find the best YOLO model for your edge device!
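To give a feel for what choosing a model from a latency-accuracy benchmark involves, here is a minimal Python sketch. It assumes each benchmark entry is a (name, latency, accuracy) triple, keeps only the Pareto-optimal entries, and then picks the most accurate one that fits a latency budget. The model names and numbers are invented for illustration; they are not YOLOBench results, and this is not the code behind the app.

```python
from typing import List, NamedTuple, Optional


class Entry(NamedTuple):
    name: str          # hypothetical model identifier
    latency_ms: float  # inference latency on the target device (lower is better)
    accuracy: float    # detection accuracy, e.g. mAP (higher is better)


def pareto_front(entries: List[Entry]) -> List[Entry]:
    """Keep entries that no other entry beats on both latency and accuracy."""
    front: List[Entry] = []
    best_acc = float("-inf")
    # Walk from fastest to slowest; on a latency tie, see the most accurate first.
    for e in sorted(entries, key=lambda e: (e.latency_ms, -e.accuracy)):
        if e.accuracy > best_acc:  # strictly better than every faster model
            front.append(e)
            best_acc = e.accuracy
    return front


def best_under_budget(entries: List[Entry], budget_ms: float) -> Optional[Entry]:
    """Most accurate Pareto-optimal entry within the latency budget, if any."""
    candidates = [e for e in pareto_front(entries) if e.latency_ms <= budget_ms]
    return max(candidates, key=lambda e: e.accuracy, default=None)


# Made-up entries, for illustration only.
ENTRIES = [
    Entry("model_a", 12.0, 0.30),
    Entry("model_b", 20.0, 0.28),  # slower and less accurate than model_a
    Entry("model_c", 25.0, 0.37),
    Entry("model_d", 40.0, 0.42),
    Entry("model_e", 45.0, 0.41),  # slower and less accurate than model_d
]

if __name__ == "__main__":
    print([e.name for e in pareto_front(ENTRIES)])
    print(best_under_budget(ENTRIES, budget_ms=30.0))
```

Filtering to the Pareto front first means the final choice only ever compares models that represent a genuine trade-off between speed and accuracy.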

Questions? Contact us at yolobench@deeplite.ai!

AI-Driven Optimization to make Deep Neural Networks faster, smaller and more energy-efficient from cloud to edge computing

Make AI accessible and affordable to benefit everyone's daily life.

Enable Edge Computing

Create new possibilities by bringing AI computation to everyday devices such as cars, drones and cameras.

Economize on Data Centers

Faster DNNs lower the cost of cloud and hardware back-ends, helping businesses scale their AI services.

Faster Time-to-Market

Automated design space exploration can drastically reduce development effort by making robust designs easier to find.

Introducing Neutrino™

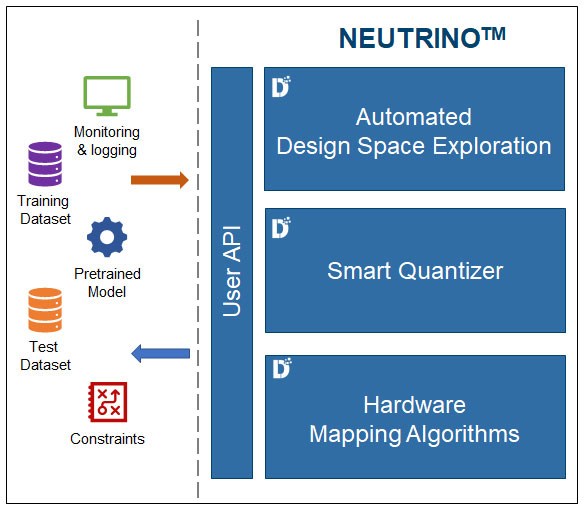

Deeplite Neutrino™ uses a novel multi-objective design space exploration approach to automatically optimize high-performance DNN models, making them dramatically faster, smaller and more power-efficient without sacrificing performance in real-time and resource-limited AI environments.

Easy-to-use

Neutrino™ provides a powerful API that fits seamlessly into your routine workflow with minimal effort. The engine is designed to be intuitive and to integrate with existing AI frameworks.

Design Space Exploration

Neutrino™ delivers fully automated, multi-objective design space exploration with respect to operational constraints, producing highly compact deep neural networks.

Smart Quantizer

Neutrino™ exploits low-precision weights using highly efficient algorithms that learn an optimal precision configuration across the neural network to get the best out of the target platform.

DeepliteRT

Accelerate computer vision ML inference with a high-performance 2-bit quantization runtime for Arm Cortex-A CPUs.

· Deploy advanced video analytics and computer vision features on cost-effective Arm CPUs.

· Faster time-to-market and compatibility for existing mobile devices, surveillance cameras, and machine vision systems.

· Lower-cost hardware solutions than developing custom GPU or NPU hardware designs.

Smart 2-Bit Quantization

World-leading model optimization that leverages training-aware 2-bit quantization to retain model accuracy while reducing memory bandwidth.
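For readers unfamiliar with the idea, the sketch below shows what storing weights in 2 bits means in its simplest form: each weight is mapped to one of four evenly spaced levels, and four 2-bit codes are packed into a single byte. This is a generic uniform quantizer applied after the fact, written in plain Python as an assumption-laden illustration; it is not Deeplite's algorithm, and training-aware methods go further by learning the weights with the 2-bit constraint in the loop. All function names are hypothetical.

```python
from typing import List, Tuple


def quantize_2bit(weights: List[float]) -> Tuple[List[int], float, float]:
    """Map each weight to a code in {0, 1, 2, 3} on a uniform grid over [min, max]."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 3  # 4 levels are separated by 3 equal steps
    if scale == 0:
        scale = 1.0        # all weights equal; any non-zero scale works
    codes = [min(3, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def dequantize(codes: List[int], scale: float, lo: float) -> List[float]:
    """Recover approximate weights from 2-bit codes."""
    return [lo + c * scale for c in codes]


def pack(codes: List[int]) -> bytes:
    """Pack four 2-bit codes per byte, first code in the lowest bits."""
    packed = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, c in enumerate(codes[i:i + 4]):
            byte |= c << (2 * j)
        packed.append(byte)
    return bytes(packed)


if __name__ == "__main__":
    weights = [-1.0, -0.4, 0.1, 0.7, 2.0]
    codes, scale, lo = quantize_2bit(weights)
    print(codes, dequantize(codes, scale, lo), len(pack(codes)))
```

Compared with 32-bit floats, 2-bit codes take one sixteenth of the storage (plus a scale and offset per group of weights), which is where the reduction in memory traffic comes from.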

Arm CPU Runtime

Fastest Arm Cortex-A Neon runtime. Provides compute optimization that delivers the highest inference performance and power efficiency.

PyTorch Optimization

Optimal deployment path for PyTorch vision models for Arm-based embedded systems.

Solutions

The result is robust AI on cost-effective hardware platforms. Our customers use our solutions to get the most from their investment in AI experts and to scale their deep learning development on one standard software platform.

Automotive

Real-time deep learning on low-power processors to help move people and things around the planet safely.


Smartphones

On-device AI for an engaging experience that captivates the user and conserves battery life.


Smart Cameras

Understand, analyze and make predictions from video and images in an instant using real-time deep learning.


IoT Devices

Deploy deep learning on edge devices and smart sensors to generate new insights and intelligence.
